This document describes the graphs provided by the vSAN performance service, which gives end-to-end visibility into vSAN performance. Its metrics are accessible through the vSphere Web Client and extend an administrator's view into the vSAN storage environment.

Front-end vs Back-end

Many of the performance graphs refer to front-end and back-end. Front-end refers to virtual machine traffic: the application on the virtual machine reads from and writes to disk, generating, say, 100 IOPS. Back-end refers to traffic to the underlying objects: the same VM, configured with a RAID-1 policy, writes each IO to both replicas, so 100 front-end write IOPS total 200 back-end IOPS.

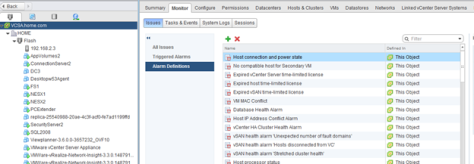
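The front-end to back-end arithmetic can be sketched in a few lines. This is a simplified model, not how vSAN itself reports the numbers: it assumes that every front-end write is committed to each RAID-1 replica, that each read is served by a single replica, and that resync and other overhead traffic is ignored. The function name `backend_iops` and its parameters are hypothetical.

```python
def backend_iops(frontend_read_iops: float,
                 frontend_write_iops: float,
                 replicas: int = 2) -> float:
    """Estimate back-end IOPS for a RAID-1 object.

    Assumption: a write lands on every replica, a read is served by
    one replica, and overhead traffic (resync, metadata) is ignored.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return frontend_read_iops + frontend_write_iops * replicas


# The example from the text: 100 front-end write IOPS on a two-replica mirror.
print(backend_iops(frontend_read_iops=0, frontend_write_iops=100))  # 200
```

Under this model a read-heavy workload shows a much smaller gap between the front-end and back-end graphs than a write-heavy one.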

Cluster Level

These views provide insight into the front-end and back-end performance and utilization at the cluster level.

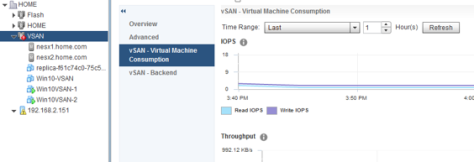

vSAN – Virtual Machine Consumption

This set of graphs provides a front-end view of all virtual machines in the cluster.

Graphs

- IOPS – IOPS consumed by all vSAN clients in the cluster, including virtual machines and stats objects

- Throughput – Throughput of all vSAN clients in the cluster, including virtual machines and stats objects

- Latency – Average latency of IOs generated by all vSAN clients in the cluster, including virtual machines and stats objects

- Congestion – Congestion of IOs generated by all vSAN clients in the cluster, including virtual machines and stats objects

- Outstanding IO – Outstanding IO from all vSAN clients in the cluster, including virtual machines and stats objects

vSAN – Backend

This section provides a glimpse into the backend of the vSAN cluster.

Graphs

- IOPS – vSAN Cluster Backend IOPS

- Throughput – vSAN Cluster Backend Throughput

- Latency – vSAN Cluster Backend Latency

- Congestion – vSAN Cluster Backend Congestion

- Outstanding IO – vSAN Cluster Backend Outstanding IO

Host Level

Similar to the cluster view, the host view provides insight into front-end and back-end performance and utilization, but at the host level. Because the ESXi host is the foundational building block of a vSAN cluster, these views also provide insight into the individual disk groups and disks, as well as the hardware and software adapters used by vSAN.

vSAN – Virtual Machine Consumption

This set of graphs provides a front-end view of all virtual machines on the host.

Graphs

- IOPS – IOPS consumed by all vSAN clients on the host, including virtual machines and stats objects

- Throughput – Throughput of all vSAN clients on the host, including virtual machines and stats objects

- Latency – Latency of all vSAN clients on the host, including virtual machines and stats objects

- Local Client Cache Hit IOPS – Average local client cache read IOPS

- Local Client Cache Hit Rate – Percentage of read IOs which could be satisfied by the local client cache

- Congestions – Congestion of all vSAN clients on the host, including virtual machines and stats objects

- Outstanding IO – Outstanding IO for all vSAN clients on the host, including virtual machines and stats objects

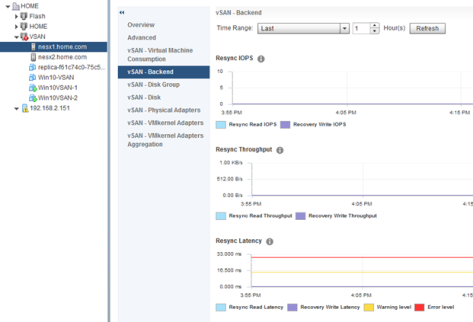

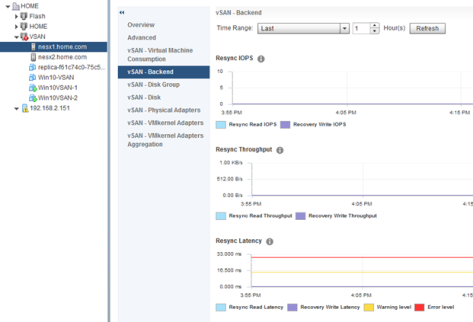
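As a rough illustration of how the Local Client Cache Hit Rate relates to the IOPS graphs, the percentage can be derived from the cache hit IOPS and the total read IOPS. The function and parameter names below are hypothetical, and the formula is an assumption based on the graph description, not a statement of how the performance service computes the value internally.

```python
def cache_hit_rate(cache_hit_iops: float, total_read_iops: float) -> float:
    """Percentage of read IOs served from the local client cache.

    Returns 0.0 when there were no reads in the interval, to avoid
    dividing by zero.
    """
    if total_read_iops <= 0:
        return 0.0
    return 100.0 * cache_hit_iops / total_read_iops


# 30 of 120 read IOPS were satisfied by the local client cache.
print(cache_hit_rate(cache_hit_iops=30, total_read_iops=120))  # 25.0
```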

vSAN – Backend

This section provides a glimpse into the backend of the vSAN host.

Resync Metrics

The resync metrics include the traffic and load created by operations that are initiated either automatically or by an administrator. These operations include policy changes, object repairs, maintenance mode and related evacuations, and rebalance operations, whether manual or automatic. The graphs also identify the cause of each resync operation, which helps when determining the impact of maintenance mode and rebalance operations.

Graphs

- IOPS – vSAN host Backend IOPS

- Throughput – vSAN host Backend Throughput

- Latency – vSAN host Backend Latency

- Resync IOPS – IOPS consumed by resync operations

- Resync Throughput – Throughput of resync operations

- Resync Latency – Latency of resync operations

- Congestions – vSAN host Backend Congestion

- Outstanding IO – vSAN host Backend Outstanding IO

vSAN – Disk Group

This view enables an administrator to review read and write performance at the level of the individual disk group. Any activity or latency on a disk group is shown in this section.

Graphs

- Frontend(Guest) IOPS – vSAN disk group (cache tier disk) front-end IOPS

- Frontend(Guest) Throughput – vSAN disk group (cache tier disk) front-end Throughput

- Frontend(Guest) Latency – vSAN disk group (cache tier disk) front-end Latency

- Overhead IOPS – vSAN disk group (cache tier disk) overhead IOPS

- Overhead IO Latency – vSAN disk group (cache tier disk) overhead latency

- Read Cache Hit Rate – vSAN disk group (cache tier disk) read cache hit rate

- Evictions – vSAN disk group (cache tier disk) evictions

- Write Buffer Free Percentage – vSAN disk group (cache tier disk) write buffer free percentage

- Capacity and Usage – vSAN disk group capacity and usage

- Cache Disk de-stage rate – The throughput of the data de-staging from cache disk to capacity disk

- Congestions – vSAN disk group congestion

- Outstanding IO – The outstanding write IO of disk groups

- Outstanding IO Size – The outstanding write IO size of disk groups

- Delayed IO Percentage – Percentage of IOs which go through vSAN internal queues

- Delayed IO Average Latency – The average latency of IOs which go through vSAN internal queues

- Delayed IOPS – The IOPS of delayed IOs which go through vSAN internal queues

- Delayed IO Throughput – The throughput of delayed IOs which go through vSAN internal queues

- Resync IOPS – vSAN disk group level IOPS of resync traffic

- Resync Throughput – vSAN disk group level throughput of resync traffic

- Resync Latency – vSAN disk group level average latency of resync traffic
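Two of the percentage graphs above can be related to the raw counters with simple ratios. The sketch below is illustrative only: the `DiskGroupSample` container and its field names are hypothetical, and the formulas are assumptions inferred from the graph descriptions (delayed IOs as a share of all IOs, free write buffer as a share of total write buffer size).

```python
from dataclasses import dataclass


@dataclass
class DiskGroupSample:
    """Hypothetical counters for one sampling interval of a disk group."""
    total_iops: float
    delayed_iops: float
    write_buffer_used_gb: float
    write_buffer_size_gb: float


def delayed_io_percentage(sample: DiskGroupSample) -> float:
    """Share of IOs that went through the internal queues, in percent."""
    if sample.total_iops <= 0:
        return 0.0
    return 100.0 * sample.delayed_iops / sample.total_iops


def write_buffer_free_percentage(sample: DiskGroupSample) -> float:
    """Share of the cache tier write buffer that is still free, in percent."""
    if sample.write_buffer_size_gb <= 0:
        return 0.0
    free_gb = sample.write_buffer_size_gb - sample.write_buffer_used_gb
    return 100.0 * free_gb / sample.write_buffer_size_gb


sample = DiskGroupSample(total_iops=2000, delayed_iops=150,
                         write_buffer_used_gb=150, write_buffer_size_gb=600)
print(delayed_io_percentage(sample))         # 7.5
print(write_buffer_free_percentage(sample))  # 75.0
```

A falling write buffer free percentage together with a rising delayed IO percentage would, under these assumptions, suggest that de-staging to the capacity tier is not keeping up with incoming writes.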

vSAN – Disk

This view enables an administrator to review read and write performance at the level of the individual disk, whether it is a cache disk or a capacity disk.

Graphs

- Physical/Firmware Layer IOPS – vSAN cache/capacity tier disk physical IOPS at the firmware level

- Physical/Firmware Layer Throughput – vSAN cache/capacity tier disk physical throughput at the firmware level

- Physical/Firmware Layer Latency – vSAN cache/capacity tier disk physical latency at the firmware level

- vSAN Layer IOPS – Capacity tier disk vSAN layer IOPS

- vSAN Layer Latency – Capacity tier disk vSAN layer latency

vSAN – Physical Adapters

This view enables an administrator to review inbound and outbound performance at the level of the individual physical network adapter.

Graphs

- pNIC Throughput – Physical NIC throughput

- pNIC Packets Per Second – Physical NIC packets per second

- pNIC Packets Loss Rate – Physical NIC packet loss

vSAN – VMkernel Adapters

This view enables an administrator to review inbound and outbound performance at the level of the individual VMkernel adapter.

Graphs

- VMkernel Network Adapter Throughput – VMkernel write throughput

- VMkernel Network Adapter Packets Per Second – VMkernel packets per second

- VMkernel Network Adapter Packets Loss Rate – VMkernel packet loss

vSAN – VMkernel Adapters Aggregation

This view enables an administrator to review the aggregated inbound and outbound performance on all VMkernel adapters in a host.

Graphs

- vSAN Host Network I/O Throughput – Host throughput for all VMkernel network adapters enabled for vSAN traffic.

- vSAN Host Packets Per Second – Host packets per second for all VMkernel network adapters enabled for vSAN traffic.

- vSAN Host Packets Loss Rate – Host packet loss for all VMkernel network adapters enabled for vSAN traffic.
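To illustrate what aggregation across VMkernel adapters can look like, the sketch below sums per-adapter throughput and packet rates and derives a host-wide loss rate from the summed counters. The `AdapterSample` type, its fields and the `aggregate` function are hypothetical, and the weighting is an assumption; the source does not state how the performance service combines the per-adapter values.

```python
from typing import Iterable, NamedTuple


class AdapterSample(NamedTuple):
    """Hypothetical per-adapter counters for one sampling interval."""
    name: str
    throughput_bps: float
    packets_per_second: float
    lost_packets_per_second: float


def aggregate(samples: Iterable[AdapterSample]) -> dict:
    """Combine per-adapter counters into host-level totals."""
    samples = list(samples)
    total_throughput = sum(s.throughput_bps for s in samples)
    total_pps = sum(s.packets_per_second for s in samples)
    total_lost = sum(s.lost_packets_per_second for s in samples)
    # Derive the loss rate from summed counters so that busy adapters
    # weigh more than idle ones (assumed, not confirmed by the source).
    loss_percent = 100.0 * total_lost / total_pps if total_pps else 0.0
    return {
        "throughput_bps": total_throughput,
        "packets_per_second": total_pps,
        "loss_rate_percent": loss_percent,
    }


host = aggregate([
    AdapterSample("vmk1", 400e6, 50_000, 5),
    AdapterSample("vmk2", 100e6, 10_000, 25),
])
print(host)
```

Averaging the two per-adapter loss rates directly (0.01% and 0.25%) would give 0.13%, while the counter-based figure here is 0.05%. The difference shows why the aggregated graph and the per-adapter graphs should be read together.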

VM Level

These views provide insight into the front-end and back-end performance and utilization at the VM level.

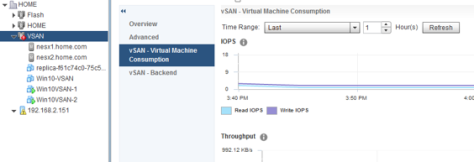

vSAN – Virtual Machine Consumption

This section displays metrics of the individual VM.

Graphs

- IOPS – VM IOPS

- Throughput – VM Throughput

- Latency – VM Latency

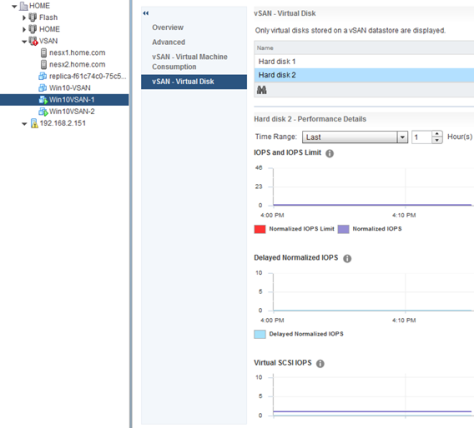

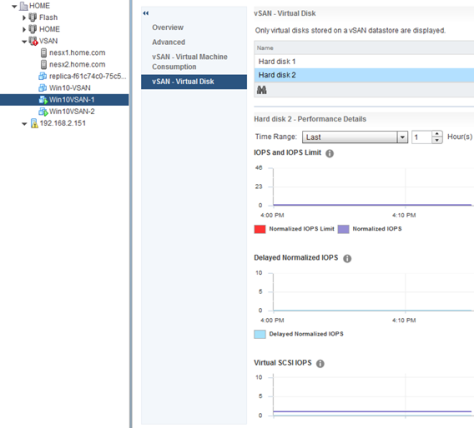

vSAN – Virtual Disk

This section shows metrics at the level of the virtual disk. This granularity enables an administrator to examine a specific disk at its VSCSI level.

Graphs

- IOPS and IOPS Limits – Normalized IOPS for a virtual disk. If an IOPS limit has been applied via policy, then the graph will also show the limit.

- Delayed Normalized IOPS – Normalized IOPS for the IOs that are delayed due to the application of the IOPS limit; this shows the impact of the limit.

- Virtual SCSI IOPS – IOPS measured at the VSCSI layer for the individual disk

- Virtual SCSI Throughput – Throughput measured at the VSCSI layer for the individual disk

- Virtual SCSI Latency – Latency measured at the VSCSI layer for the individual disk
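The difference between normalized IOPS and the Virtual SCSI IOPS graph can be illustrated with a short sketch. It assumes the 32 KB base size that VMware's storage policy documentation describes for IOPS limits, where an IO of up to 32 KB counts as one normalized IO and a 64 KB IO counts as two. The constant and function names are hypothetical, and the sketch is not the performance service's own implementation.

```python
from typing import Iterable

# Assumed base size for normalization (32 KB).
NORMALIZED_IO_SIZE_BYTES = 32 * 1024


def normalized_io_count(io_size_bytes: int) -> int:
    """Number of normalized IOs that one IO of the given size counts as."""
    if io_size_bytes <= 0:
        raise ValueError("io_size_bytes must be positive")
    # Integer ceiling division: round up to whole 32 KB units.
    return -(-io_size_bytes // NORMALIZED_IO_SIZE_BYTES)


def normalized_iops(io_sizes_bytes: Iterable[int],
                    interval_seconds: float) -> float:
    """Normalized IOPS for the IOs issued during one interval."""
    total = sum(normalized_io_count(size) for size in io_sizes_bytes)
    return total / interval_seconds


# Four IOs in one second: 4 KB, 32 KB, 64 KB and 100 KB.
sizes = [4 * 1024, 32 * 1024, 64 * 1024, 100 * 1024]
print(len(sizes))                   # 4 IOs at the VSCSI layer
print(normalized_iops(sizes, 1.0))  # 8.0 normalized IOPS
```

Under this assumption, a workload issuing large IOs can reach a policy limit well before its Virtual SCSI IOPS graph approaches the same number.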