Introduction

In a previous article, the topic was leveraging flash for a virtual infrastructure. Some environments today are still not fully virtualized, typically because the last workloads to be virtualized are the systems with the most demanding application requirements, such as SQL and Oracle. These types of workloads can benefit greatly from flash-based caching. Many hardware/software combination solutions exist for in-OS caching in non-virtualized environments, but they will be covered in a separate article. This article focuses on FabricCache, QLogic’s host bus adapter (HBA)-based caching solution.

How it works

QLogic’s take on using flash technology to accelerate SAN storage is to place the cache directly on the SAN, in its HBAs, treating cache as a shared SAN resource.

Overview

The QLogic FabricCache 10000 Series Adapter consists of an FC HBA and a PCI-e flash device. The two cards are ribbon-connected, which gives the HBA direct access to the flash device; the PCI bus provides only power to the flash card. While some consider this a two-card solution, keep in mind that a host using a PCI flash adapter to accelerate data traffic would also have an HBA installed. That said, an existing HBA cannot be re-used with the FabricCache solution.

Caching

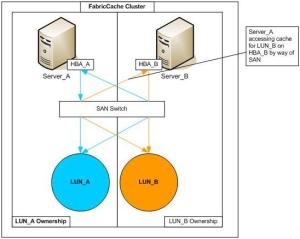

A LUN is pinned to a specific HBA/cache: every host that needs the cached data for that LUN accesses it from the FabricCache adapter to which the LUN is pinned. For example, Server_A and Server_B are two ESX hosts in a cluster. Datastore_A is on LUN_A and Datastore_B is on LUN_B. LUN_A is tied to HBA_A on Server_A, while LUN_B is tied to HBA_B on Server_B. All of the cached data for LUN_A lives in the cache on HBA_A, so if a VM on Server_B is running on LUN_A, its cache resides on HBA_A on Server_A. In this case, Server_B accesses the cache across the SAN, on the neighboring host. A cache miss results in storage array access. For this reason, all adapters must be in the same zone so that each can see the other caches in the FabricCache cluster.
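The pinning behavior described above can be sketched as a small model. This is only an illustration, not QLogic’s implementation: the class and method names (`FabricCacheCluster`, `pin`, `read`) are hypothetical, the storage array is stood in for by a plain dictionary, and the assumption that a miss populates the owning adapter’s cache is mine.

```python
from dataclasses import dataclass, field


@dataclass
class Adapter:
    """Hypothetical model of one FabricCache adapter."""
    name: str
    cached_blocks: dict = field(default_factory=dict)  # (lun, block) -> data


class FabricCacheCluster:
    """Toy model: each LUN is pinned to exactly one adapter's cache."""

    def __init__(self):
        self.adapters = {}   # adapter name -> Adapter
        self.lun_owner = {}  # LUN name -> name of the adapter it is pinned to

    def add_adapter(self, name):
        self.adapters[name] = Adapter(name)

    def pin(self, lun, adapter_name):
        self.lun_owner[lun] = adapter_name

    def read(self, requesting_adapter, lun, block, array):
        """Return (data, path), where path says where the read was served."""
        owner = self.adapters[self.lun_owner[lun]]
        key = (lun, block)
        if key in owner.cached_blocks:
            local = owner.name == requesting_adapter
            return owner.cached_blocks[key], "local cache" if local else "remote cache"
        data = array[key]                # cache miss: go to the storage array
        owner.cached_blocks[key] = data  # assumption: the owning cache is populated
        return data, "array"


cluster = FabricCacheCluster()
cluster.add_adapter("HBA_A")
cluster.add_adapter("HBA_B")
cluster.pin("LUN_A", "HBA_A")
array = {("LUN_A", 0): b"vm-data"}

print(cluster.read("HBA_B", "LUN_A", 0, array))  # (b'vm-data', 'array')
print(cluster.read("HBA_B", "LUN_A", 0, array))  # (b'vm-data', 'remote cache')
```

The second read from HBA_B is served by the cache on HBA_A, which is why the adapters need to see each other on the fabric.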

In a FabricCache cluster, all data access is in-band between the HBAs on the fabric; the cache is not visible to the host operating system, the hypervisor, or the HBA driver. QLogic’s FabricCache is a write-through cache: all writes are sent through the cache, and the array must acknowledge each write before the operating system is told that the write operation is complete. The lack of write caching is based on the premise that roughly 80% of SAN traffic is reads, so read caching accelerates the largest portion of SAN traffic.
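To make the write-through behavior concrete, here is a minimal sketch. It is a generic write-through cache, not QLogic’s firmware logic; the class name `WriteThroughCache` is hypothetical, a dictionary stands in for the array, and updating the cached copy on a write (rather than invalidating it) is an assumption.

```python
class WriteThroughCache:
    """Generic write-through cache sketch; a dict stands in for the SAN array."""

    def __init__(self, array):
        self.array = array  # backing store (the storage array)
        self.cache = {}     # flash cache contents

    def write(self, key, data):
        # The write passes through to the array and is only acknowledged
        # once the array holds the data.
        self.array[key] = data
        # Assumption: the cached copy is refreshed so later reads stay consistent.
        self.cache[key] = data
        return "ack"

    def read(self, key):
        if key in self.cache:
            return self.cache[key], "hit"
        data = self.array[key]  # miss: fetch from the array
        self.cache[key] = data  # keep a copy for subsequent reads
        return data, "miss"


array = {"block-7": b"old"}
cache = WriteThroughCache(array)
print(cache.read("block-7"))          # (b'old', 'miss')
print(cache.write("block-7", b"new")) # ack
print(array["block-7"])               # b'new'
print(cache.read("block-7"))          # (b'new', 'hit')
```

Because the array always holds every acknowledged write, losing the cache costs performance but not data.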

The technology can be managed from the command line using the QLogic Host CLI, and a GUI called QConvergeConsole is also available, as is a plug-in for VMware’s vCenter. In this interface, an administrator can target specific LUNs to cache and assign each LUN to the specific HBA cache that should be used.

When and Why FabricCache

In my opinion, the biggest reason to use FabricCache is the pricing model. Since the solution is all hardware, there is no annual software maintenance. There is a hardware warranty that must be purchased for the first year, but that cost can typically be capitalized, resulting in an OpEx-free solution. Ongoing maintenance, at least when I checked, is not required.

Once you reach five systems with the adapter, the annual maintenance cost for those five systems equals the cost of purchasing a standby adapter and keeping it on the shelf in case of failure, which avoids the recurring maintenance costs.
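The break-even point above is simple arithmetic: it is the number of adapters at which one year of maintenance costs as much as one spare. A rough sketch follows; the function name is hypothetical and the dollar figures are placeholders chosen only so that the result matches the five-system figure above, not actual QLogic pricing.

```python
import math


def break_even_systems(adapter_cost, annual_maintenance_per_adapter):
    """Smallest number of adapters whose combined annual maintenance
    meets or exceeds the price of one standby adapter."""
    if annual_maintenance_per_adapter <= 0:
        raise ValueError("annual maintenance must be positive")
    return math.ceil(adapter_cost / annual_maintenance_per_adapter)


# Placeholder prices (not real quotes): maintenance at one fifth of the adapter price.
print(break_even_systems(5000, 1000))  # 5
```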

I believe this technology is a great fit where the supportability of a cache solution, the lifespan of the flash, and the recurring cost of the solution are paramount to the decision. These areas are discussed in greater detail in the sections below.

Supportability

- No software aside from the HBA driver – this is very beneficial in that there is no risk of third-party software acting as a shim in your operating system. Custom-written cache drivers are often supported only by the manufacturer of the software and are not thoroughly tested by the operating system or hypervisor vendor. This can result in complications when upgrading or integrating with other pieces of software.

- Physical Clusters – Since cache is part of the SAN, cache will still be available during failover.

- Non-VMware Hypervisors – Many hypervisor products do not have host-based cache products available. Since this solution is all hardware, with a simple operating system driver for the HBA, this makes it a good candidate for systems that do not have a cache product available for them.

Longer Life/Lower Cost

- Uses SLC flash – SLC flash typically lasts about five to ten times longer than MLC or eMLC flash, and typically delivers about twice the performance. The result is faster cache access and a longer time until failure.

- Slightly higher CapEx due to cost of flash, but gives the option to avoid OpEx

When Not and Why Not FabricCache

There are also a few cases where I would avoid this solution in favor of an in-OS or in-guest solution. These cases are listed below.

- Virtual Environment – Since the HBA owns the LUN, as shown in the diagram earlier in this article, all VMs on a given LUN would need to run on the host that owns that LUN to have access to local cache. After a vMotion, the VM’s new host would need to access the cache on another host across the SAN.

- Many organizations use two HBAs in a single host, each connected to a separate switch, for resiliency in the event of an HBA failure. This configuration would require the purchase of two FabricCache adapters, which makes the price almost unpalatable.

Another notable item with this solution is that in the event of a server in the FabricCache cluster becoming unavailable, no cache acceleration will be available for the LUNs serviced by the FabricCache adapter on the failed host.

Conclusion

This article focused on a host bus adapter-based caching solution, QLogic’s FabricCache: how it works and where I see it as a good fit in an infrastructure.